Commercial AI applications are now everyday fixtures in many sectors, where they make our lives easier. The medical field is also eager to benefit from this revolution. Automated diagnosis from image analysis, AI-powered devices that assist in surgery, and the prediction of patient outcomes are some examples of what AI could bring to the table in the coming years. Patient mortality prediction is one of the areas that can benefit from an AI approach.

Getting Data

After several sleepless, Red Bull-fueled nights researching the topic, I came across the MIMIC-III dataset. This godsend is an extensive database containing de-identified data about patients who stayed in the intensive care units at Beth Israel Deaconess Medical Center in Boston, Massachusetts, between 2001 and 2012. Patients' vital signs, lab test results, medical procedures, administered drugs, transcriptions of medical staff notes, and demographic data are found among many other useful features.

To build a machine learning model, the following features were extracted from the database:

- Lab tests: Blood urea nitrogen, Platelet count, Hematocrit, Potassium, Sodium, Creatinine, Bicarbonate, White blood cell count, Glucose and Albumin.

- Vital signs: Heart rate, Respiratory rate, Systolic pressure, Diastolic pressure, Temperature, Oxygen saturation.

- Administrative data: Admission medical service, Surgery/No Surgery undergone, ICU length of stay, total length of stay, OASIS, SAPS and SOFA severity scores, time under mechanical ventilation.

- Demographic info: Age, gender, marital status, religion and ethnicity.

Defining the Goal

Since we are trying to predict patient mortality, the task can be framed as a binary classification problem. Our main goal will be to predict which group a patient belongs to.

Cleaning and Preparing Data

The data contains many outliers and imperfections. Measurement errors, human mistakes, and plain data complexity and imbalance make a preprocessing step necessary before the data can be fed to a model.

To make the data usable, outliers beyond the 95th percentile were excluded, and categorical features with many imbalanced classes were grouped into meaningful groups. In addition, all missing values were imputed with the MICE (Multiple Imputation by Chained Equations) method, and all categorical features were one-hot encoded. Scaling the data to a common range can also speed up the convergence of the neural network.
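The filtering, encoding and scaling steps described above can be sketched in plain Python. This is only a minimal illustration under stated assumptions, not the pipeline used for the results in this post: the function names and the toy readings are hypothetical, the percentile uses a simple nearest-rank rule, and MICE imputation is left out because it needs a dedicated library.

```python
import math

def percentile_cutoff(values, pct=95):
    """Nearest-rank percentile: smallest value with at least pct% of the data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def drop_outliers(values, pct=95):
    """Keep only the values at or below the chosen percentile."""
    cutoff = percentile_cutoff(values, pct)
    return [v for v in values if v <= cutoff]

def one_hot(labels):
    """Encode each label as a 0/1 vector with one position per (sorted) category."""
    categories = sorted(set(labels))
    return [[1 if label == c else 0 for c in categories] for label in labels]

def min_max_scale(values):
    """Rescale values linearly to the [0, 1] range."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical heart-rate readings: 19 plausible values plus one measuring error.
heart_rates = list(range(60, 98, 2)) + [400]
cleaned = drop_outliers(heart_rates, pct=95)   # the 400 reading is removed
scaled = min_max_scale(cleaned)                # values now lie between 0 and 1
encoded = one_hot(["married", "single", "married"])
```

Filtering before scaling matters here: if the 400 reading were kept, min-max scaling would squeeze all plausible heart rates into a narrow band near zero.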

Exploratory analysis

Demographic feature exploration

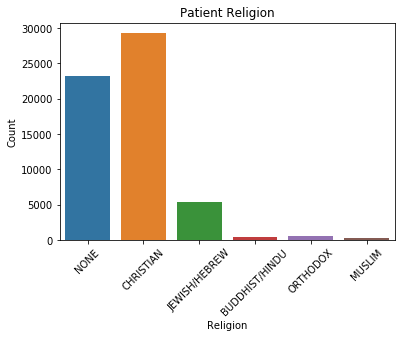

Religion

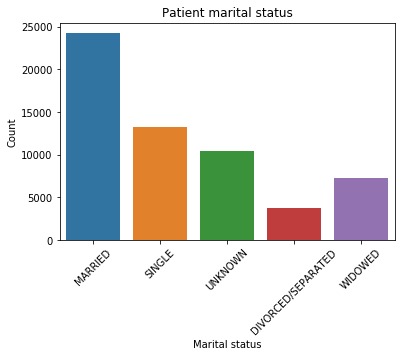

Marital status

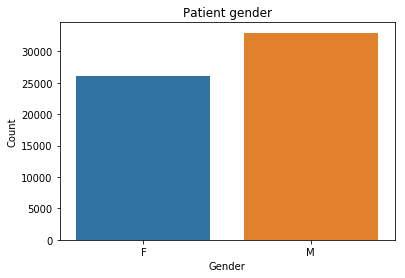

Sex

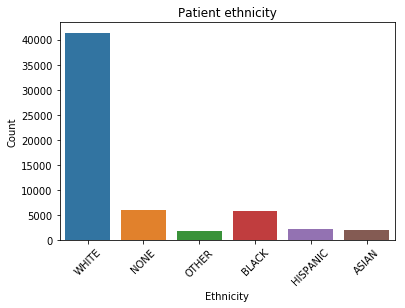

Race

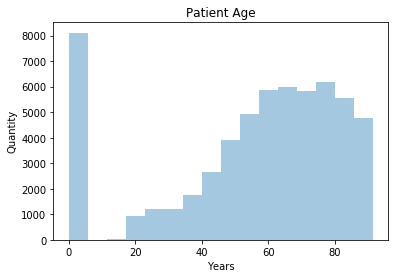

Age

From the above plots we can see that most of the patients are white, male, and married, with a mean age of 60. We also have to be careful about the imbalance within the dataset.

Basic Neural Network

A simple fully connected neural network with four layers and four neurons per layer is implemented using Keras.

    import numpy as np
    from keras.layers import Dense
    from keras.models import Sequential
    from sklearn.metrics import accuracy_score, f1_score, roc_auc_score

    def train_neural_network(self, X_train, Y_train, X_test, Y_test, params):
        print(params)
        n_layers = params['n_layers']
        n_neurons = params['n_neurons']
        batch_size = params['batch_size']
        epochs = params['epochs']
        optimizer = params['optimizer']

        # Stack n_layers hidden layers; input_shape only takes effect on the first one
        model = Sequential()
        for i in range(n_layers):
            model.add(Dense(n_neurons, activation='relu', input_shape=(101,)))
        # Softmax output with 3 units: Y_train and Y_test must be one-hot encoded with 3 columns
        model.add(Dense(3, activation='softmax'))

        # Categorical cross-entropy is the loss that pairs with a softmax output
        model.compile(loss='categorical_crossentropy',
                      optimizer=optimizer,
                      metrics=['accuracy', 'mse'])
        model.fit(X_train, Y_train, epochs=epochs, batch_size=batch_size, verbose=1)

        # Evaluating model: turn predicted probabilities into one-hot class predictions
        Y_pred = model.predict(X_test)
        n_classes = Y_pred.shape[1]
        Y_train_pred = np.eye(n_classes, dtype=int)[model.predict(X_train).argmax(axis=1)]
        Y_test_pred = np.eye(n_classes, dtype=int)[Y_pred.argmax(axis=1)]

        # Train and test accuracies
        train_accuracy = accuracy_score(Y_train, Y_train_pred)
        test_accuracy = accuracy_score(Y_test, Y_test_pred)
        # scikit-learn metrics take (y_true, y_pred) in that order
        f1_score_model = f1_score(Y_test, Y_test_pred, average='samples')
        loss, accuracy, mse = model.evaluate(X_test, Y_test, verbose=0)
        roc_auroc = roc_auc_score(Y_test, Y_pred)

        print('TRAIN ACCURACY:', train_accuracy, 'TEST ACCURACY:', test_accuracy,
              'F1 SCORE:', f1_score_model, 'LOSS:', loss, 'MSE:', mse, 'AUROC:', roc_auroc)
        return model

After training, this basic neural network achieves an AUROC score of about 0.65, with an accuracy of around 0.6. While not an astounding result, it shows that the neural network is able to extract relevant information from the data in order to predict patient mortality.
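For intuition about what an AUROC of 0.65 means: in the binary case, AUROC equals the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one, so 0.5 corresponds to random guessing and 1.0 to a perfect ranking. Below is a minimal sketch of that pairwise definition. The labels and scores are made up for illustration and are not data from this project, and the `auroc` helper is a hypothetical name rather than the scikit-learn function used above.

```python
def auroc(y_true, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count as half."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    if not positives or not negatives:
        raise ValueError("AUROC needs at least one positive and one negative example")
    correct = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                correct += 1.0
            elif p == n:
                correct += 0.5
    return correct / (len(positives) * len(negatives))

# Made-up example: two negatives and two positives with predicted scores.
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(auroc(y_true, scores))  # 3 of the 4 positive/negative pairs are ordered correctly: 0.75
```

The pairwise loop is quadratic in the number of samples, which is fine for a toy example; library implementations sort the scores instead.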

Network Tuning

The network should be tuned to improve its performance and make better predictions. Predictive power depends on several factors, chief among them the network's hyperparameters. Tuning is performed iteratively, by trying out several configurations until we find one that maximizes accuracy. The following parameters were tuned:

- Number of hidden layers

- Number of neurons in each layer

- Number of epochs

- Batch Size

- Optimization algorithm

Several tuning strategies can be used to accomplish this task. The most time-consuming, Grid Search, involves trying out all possible combinations of parameters. This is often infeasible in complex scenarios, as the number of combinations can easily exceed 10,000, which at about 5 minutes of training per configuration would mean waiting more than a month to find the optimal parameter configuration.
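To see how quickly Grid Search becomes impractical, the cost can be worked out directly. The search space below is a hypothetical one chosen for illustration (the value lists are assumptions, not the grid used in this post); only the 5 minutes per training run comes from the estimate above.

```python
from itertools import product

# Hypothetical search space; every value list here is an illustrative assumption.
search_space = {
    "n_layers": list(range(1, 11)),           # 10 options
    "n_neurons": list(range(2, 22, 2)),       # 10 options
    "batch_size": list(range(10, 110, 10)),   # 10 options
    "epochs": [5, 10, 15, 20, 25],            # 5 options
    "optimizer": ["adam", "sgd", "rmsprop"],  # 3 options
}

MINUTES_PER_RUN = 5  # rough training time per configuration

# Grid Search visits the full Cartesian product of all value lists.
configurations = list(product(*search_space.values()))
total_minutes = len(configurations) * MINUTES_PER_RUN
total_days = total_minutes / (60 * 24)

print(len(configurations), "configurations,", round(total_days, 1), "days of training")
```

With these assumed value lists the grid holds 15,000 configurations, so a full sweep would take roughly 52 days of uninterrupted training.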

An alternative to Grid Search is Random Search, which tests a predefined number of random configurations, maybe 50 or 100, and picks the best one.
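A minimal sketch of Random Search in plain Python follows. The search space, the helper names and especially `toy_score` are hypothetical: in the real setting the scoring function would train the network with the sampled parameters and return a validation metric such as AUROC, whereas here a cheap made-up formula stands in so the example runs instantly.

```python
import random

# Hypothetical search space; the value lists are illustrative assumptions.
SEARCH_SPACE = {
    "n_layers": [2, 4, 6, 8],
    "n_neurons": [4, 8, 16, 32],
    "batch_size": [25, 50, 100],
    "epochs": [10, 25, 50],
    "optimizer": ["adam", "sgd", "rmsprop"],
}

def sample_configuration(space, rng):
    """Draw one value per parameter, uniformly at random."""
    return {name: rng.choice(values) for name, values in space.items()}

def random_search(score_fn, space, n_iter=50, seed=0):
    """Evaluate n_iter random configurations and return the best one with its score."""
    rng = random.Random(seed)  # fixed seed makes the search reproducible
    best_params, best_score = None, float("-inf")
    for _ in range(n_iter):
        params = sample_configuration(space, rng)
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

def toy_score(params):
    """Made-up stand-in for 'train the network and return its validation score'."""
    return -(params["n_layers"] - 4) ** 2 - (params["n_neurons"] - 16) ** 2 / 64

best_params, best_score = random_search(toy_score, SEARCH_SPACE, n_iter=50, seed=0)
print(best_params, best_score)
```

The cost is fixed by `n_iter` rather than by the size of the search space, which is what makes this approach affordable when each evaluation takes minutes.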

After 50 iterations and around 3.5 hours, the optimal parameters are gifted to us: 6 hidden layers, 8 neurons per layer, a batch size of 50, 25 epochs, and Adam as the optimization algorithm.
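Expressed as the `params` dictionary that `train_neural_network` reads, this configuration would look roughly as follows. The numeric values come from the search result above; the string `'adam'` is an assumption about how the optimizer was passed to Keras.

```python
# Tuned configuration, keyed the way train_neural_network expects.
best_params = {
    "n_layers": 6,        # hidden layers
    "n_neurons": 8,       # neurons per hidden layer
    "batch_size": 50,
    "epochs": 25,
    "optimizer": "adam",  # assumed Keras string identifier for the Adam optimizer
}
```

Passing this dictionary as the last argument of `train_neural_network`, together with the training and test sets, retrains the network with the tuned settings.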

Network Evaluation

Using the optimal parameters obtained in the previous step, the network is trained and its performance is evaluated. We get an overall AUROC score of 0.89.

Precision is the share of predicted positives that are truly positive (positive predictive value), and recall is the share of actual positives that the model identifies (sensitivity). Both are combined in the F1 score, which is computed as their harmonic mean. The F1 scores achieved support the conclusions obtained by analyzing the AUROC scores.
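The relationship between the three metrics can be made concrete with a small worked example. Precision is TP / (TP + FP), recall is TP / (TP + FN), and F1 is their harmonic mean. The labels below are made up for illustration, and `precision_recall_f1` is a hypothetical helper rather than the scikit-learn function used in the training code.

```python
def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall and F1 for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return precision, recall, f1

# Made-up labels: 4 actual positives, of which the model finds 2, plus 1 false alarm.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 0, 0, 1, 0, 0, 1]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)
```

Here precision is 2/3, recall is 1/2 and F1 is 4/7. Because the harmonic mean is pulled toward the smaller of the two values, a model cannot reach a high F1 by excelling at only one of them.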

Our training accuracy is 65.6% and our testing accuracy is 63.2%. The similarity between these two values suggests that our model does not overfit.

Future Improvements

There are still improvements that can be made to boost the model's performance and achieve better prediction capabilities.

For example, we can add more features, such as the transcriptions of the medical staff's notes about each patient. These notes often include the patient's medical history. Trying out different neural network architectures may also be helpful.